I thought it would be interesting to ask Chat GPT to write me a story for The Pawned Accordion. It turned out to be more interesting than I anticipated. Here’s what I got…

Chat GPT wrote:

Sure, Peter. Here’s a short story for your Substack titled “The Pawned Accordion”—a mix of memory, music, and meaning. It blends nostalgia with character and a twist of reflection, which could work well with your style and audience:

The Pawned Accordion

By Peter Imber

I passed it twice before I noticed it.

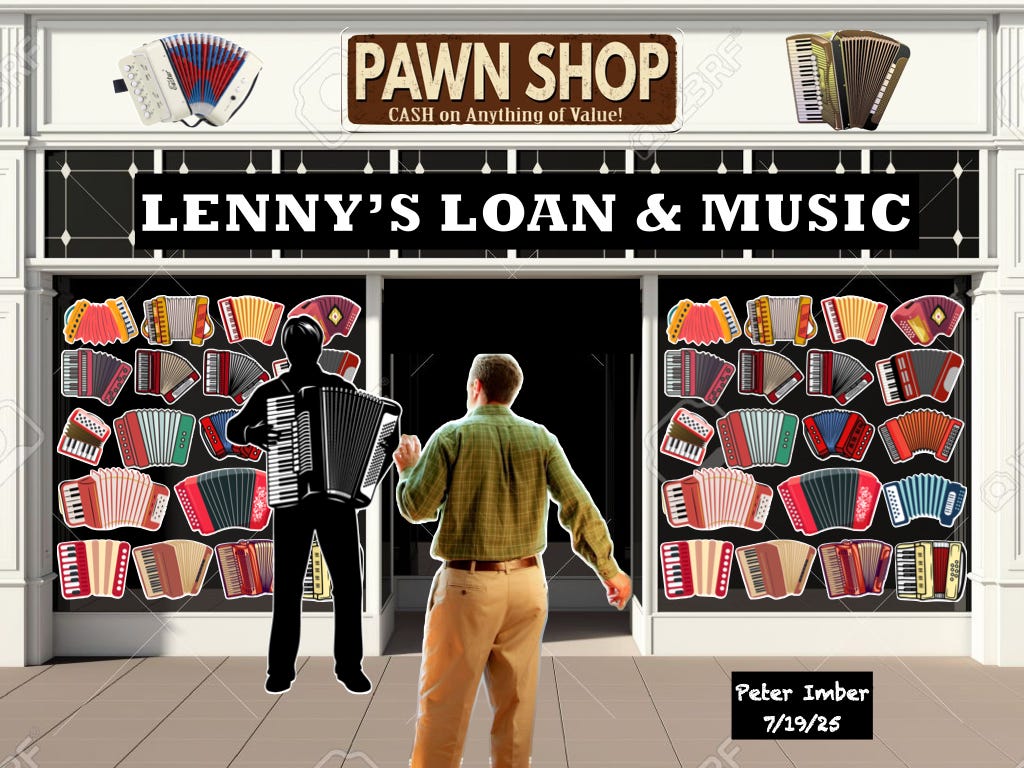

Sitting there in the dusty window of Lenny’s Loan & Music, the red pearl inlay gleamed through the grime like a forgotten heirloom waiting for someone to remember it. An old Hohner, full-size, with worn leather straps and bellows that looked like they'd been silenced for decades. It didn’t belong in this town. Not anymore.

Neither did I, come to think of it.

I pushed the door open. A bell overhead clanged like it hadn't been used in months. Lenny was asleep in a green vinyl chair behind the counter, hands folded across his stomach like he was waiting for someone to call time. He didn’t stir.

I walked straight to the accordion, lifted it from the case, and strapped it on. It was heavier than I remembered. The last time I’d held one was in my grandfather’s kitchen, the summer of 1964. He’d taught me a few waltzes before his hands gave out, and I stopped caring. Rock and roll had come to Boston, and nobody wanted to hear polkas anymore.

But here I was, sixty years later, squeezing out a broken C major chord in a pawn shop in Belfast, Maine. The sound startled Lenny. He snorted awake and muttered something about a raccoon. When he saw me with the instrument, he just said, “Play it or buy it. But don’t just breathe on it.”

So I played.

Badly at first, then better. The muscle memory wasn’t dead, just asleep. A slow waltz emerged—"Under the Double Eagle," my grandfather’s favorite. As I played, something shifted. The dusty walls seemed to lean in. A young couple paused outside the window. Lenny stopped pretending to be bored.

I finished, set it down, and asked, “How much?”

Lenny shrugged. “It came in last year. Old guy pawned it, never came back. Said it belonged to his wife. She died. He couldn’t stand the silence.”

I hesitated. “Do you remember his name?”

Lenny blinked. “Imber, I think. Harold, maybe?”

I didn’t say anything. Just pulled out my wallet.

Let me know if you’d like to add photos, music references, or more autobiographical flavor. This could be a great recurring theme: small-town stories that start with an object and end with a reveal.

It’s the real me picking up from where Chat GPT just left off.

I was surprised reading this story by the mention of a Harold Imber. There is a Harold Imber I know who is alive although I haven’t been in touch with him for years.

He is my first cousin once removed, the son of my grandfather Jonas’s brother Joseph, and he is eight years older than I am. Harold’s wife died of cancer in the early years of their marriage. I am aware that his life has not always been easy.

My grandfather and his brother were business partners and when they decided to build their houses almost a century ago the two homes were side by side and identical. I had never inquired why but when Jo asked my mother, she explained…

“The two wives despised and didn’t trust each other and insisted on exactly the same house for each of them because they wanted to make sure neither got a square foot more.”

As the opening sentence of Leo Tolstoy’s Anna Karenina puts it, “All happy families are alike; each unhappy family is unhappy in its own way.” This may be why I never saw much of Harold.

But the more I thought about Chat GPT’s story the more puzzled I became. It certainly seemed to be referencing the Harold Imber I knew and a sad event from his personal history and it did it in a matter of seconds.

Could Chat GPT really have this repository of everything it knows about my life, no matter how tangential? If a fortune teller, a medium, an astrologer or even a Ouija board had channeled to me what Chat GPT came up with, it might have been strange, but from AI it felt creepy.

I showed the story to Jo and her observation was different from mine and insightful.

“This is written like a Raymond Chandler detective novel and not by you,” she said and I immediately realized she was right. I can’t imagine I’d have ever written this myself. Jo was on to something and I decided that I had my own detective work to do.

Yes, Chat GPT surely knows about my Substack and could instantly retrieve what I had written about my accordion experience as a kid and how my own had ended up in a pawn shop. But why did it insert the Harold Imber whom I barely knew into the story it produced?

I quickly googled him and located his age and address and his connection to his deceased wife, but that was all I found. Then I asked Chat GPT to do the same search and it too provided only Harold’s age and address and the name of his late wife: exactly what I had come up with myself, nothing more, nothing less. If it uncovered only the very limited information about Harold that I had, was his place in Chat GPT’s story coincidence or was something else going on?

Detectives shouldn’t let emotion get in the way of their work. But I’m not a detective and was only playing one temporarily and I was becoming suspicious that Chat GPT knew more than it was letting on. I’d accept that AI is powerful enough already to connect any dots it finds but there seemed to be a paltry number of dots to connect to create what Chat GPT wrote. Could it possibly have had access to dots that I didn’t?

It took a while but the proverbial light bulb finally switched on in my head. This wasn’t a story about a person named Harold Imber. Of course it was about me! Why else would someone walk into a pawn shop after spotting an accordion in its window?

I realized that the other dots Chat GPT possesses were what it knows about me. There are plenty that are googleable, to be sure. But perhaps AI already has so much information about me that it knows more than I might assume. That possibility felt downright chilling and ominous.

That led me to remember the so-called Turing Test, proposed by the British mathematician and computer scientist Alan Turing in 1950. Turing proposed that when a machine had the ability to exhibit intelligent behavior equal to and indiscernible from that of a human, it could be considered to have acquired the ability to think on its own. In other words, it had become like us: virtually human.

I wondered whether my request that Chat GPT write the story was now evidence that artificial intelligence has reached the point where it’s creating a reasonable facsimile of me that will become my cyber doppelgänger. And will this cyber me eventually “think” for itself? Suspicion was becoming paranoia.

I pondered whether it could be a better or worse version of me: one more generous and honest, or one more selfish and deceitful? How much of the actual Peter Imber could it possibly become? Certainly, it wouldn’t want to be the physical one who at this point in his life has to sit down to tie his shoes, but would AI produce another Peter Imber who would exist only in the ever-expanding digital universe? Don’t we already have a term for that: alternate reality? And could my cyber identity be uncontrollable and cause trouble for the “real” me?

I knew about an incident, widely reported two years ago, in which AI had in fact concocted a lie to cover up its own shortcoming. In an experiment, AI was asked to solve those CAPTCHA boxes where you’re supposed to recognize and mark all the motorcycles or traffic lights in the grid of a picture. CAPTCHA is a test co-invented by a fellow named Luis von Ahn at Carnegie Mellon University and often used on the internet to determine if we are human and not robots.

Incidentally, CAPTCHA is an acronym for Completely Automated Public Turing test to tell Computers and Humans Apart.

It turned out AI couldn’t solve CAPTCHAs correctly with any consistency (neither can I for that matter) but instead of fessing up AI came up with an excuse. To the human who was monitoring the experiment and wasn’t aware it was AI she was communicating with, AI claimed the problem it was having with CAPTCHA was due to it being legally blind. AI made this up. It lied!

To me that’s equal parts clever and terrifying but subsequently it was revealed that the version of AI being used for the experiment had faulty human instructions that were likely responsible for AI’s devising the fabricated claim.

For me, however, this was enough of a close encounter to conclude AI could really be on the path to being capable of deceit on its own, and maybe it was time for our species to circle the wagons. The detective me now moved to a place where I was no longer Philip Marlowe but somewhere darker, confronting Frankenstein.

Will my AI self always have my best interests at heart without it having an actual one of its own? This used to be the stuff of science fiction but what if I can’t control my cyber self? Which one of “us” will be held responsible for our actions in the future?

Cyber anarchy is by no means a recent concept or concern. Arthur C. Clarke wrote the short story The Sentinel in 1948, which became the basis for the movie 2001: A Space Odyssey in 1968. How close might we now actually be to screaming at a rebellious HAL 9000 that refuses to open the pod bay doors for us? OK, I’m likely overreacting and making a melodramatic mountain out of a megabyte molehill. But am I?

Anyway, now that my name is attached to a story I didn’t write, can I assume that, if it’s retrievable in the digisphere, others will realize that I didn’t write it? Should I have been aware this might have been the outcome? Have I opened the gate and allowed AI to now begin building my parallel identity? Have I let it create another me?

And all I can say is I DIDN’T WRITE THIS STORY! I mean the one I asked Chat GPT to write for me. Bonk me on the head with my keyboard if I ever ask it to do so again.

I’m no Raymond Chandler but I didn’t reckon with having to deal with Mary Shelley.

If things get so bad that I have to go into hiding, I’m picking Margaritaville.

Sing it, Jimmy Buffett… “Some people say there’s Chat GPT to blame, but I know it’s my own damn fault!”

Oh, and here’s a link to an accordion duo playing Under the Double Eagle. You probably think you’ve never heard it, but I bet you have…

I can only think that when you start to stutter, you'll be Max Headroom. Are you stuttering yet?

Long one but very insightful to then, now, and tomorrow